
Artificial intelligence has long been a pattern-recognizing workhorse, predicting outcomes, labeling data, summarizing inputs, and generating content. Helpful, yes. Transformative, not quite.

The next leap is agentic AI: systems that don't just respond, but act. Agentic AI systems can understand goals, break them down, make decisions, and execute multi-step workflows autonomously. They behave less like tools and more like collaborators.

How Is Agentic AI Different From Generative AI and Predictive AI?

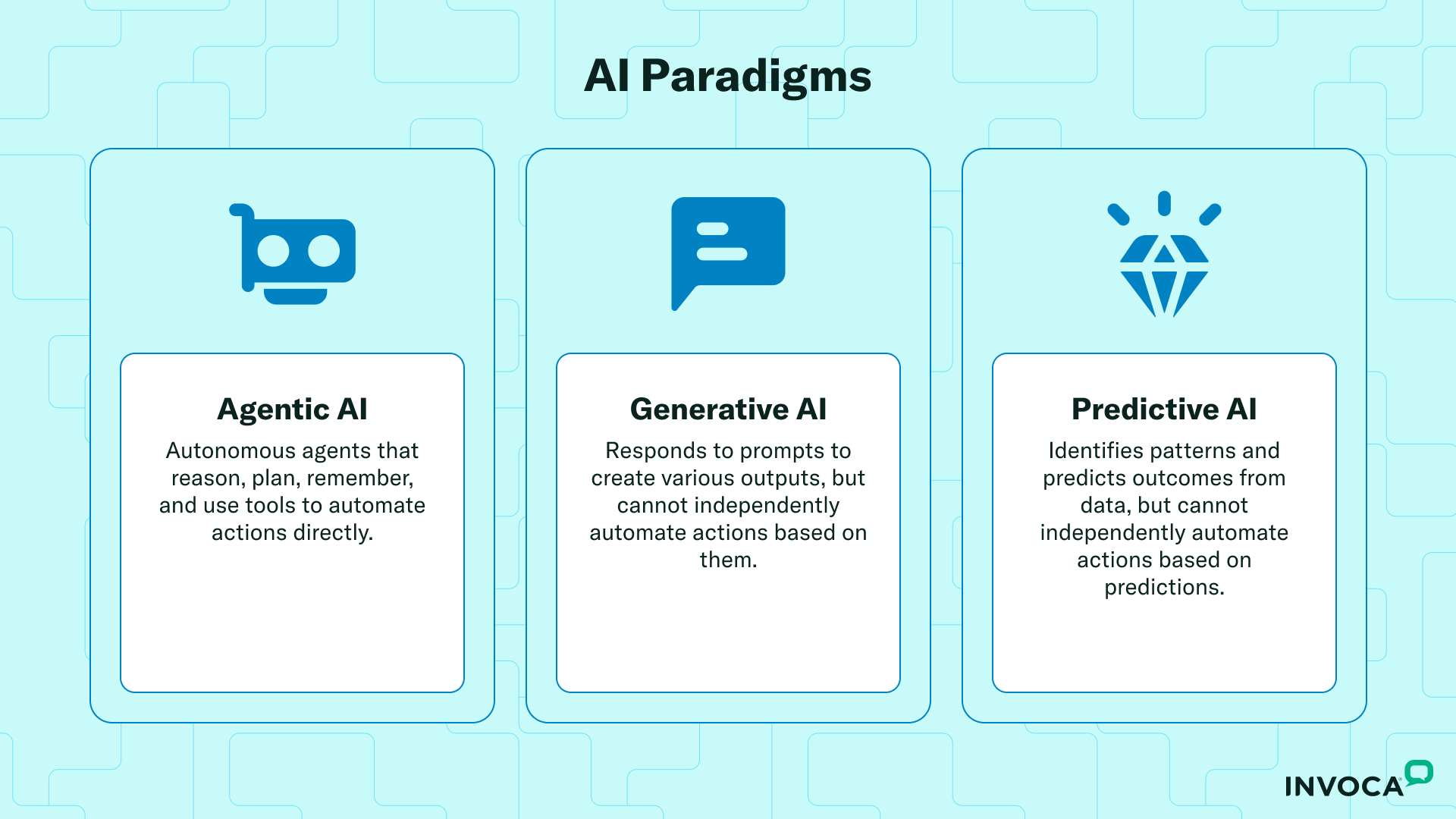

If you've used ChatGPT, Claude, or any other AI chatbot in the past couple of years, you're already familiar with generative AI. You type in a prompt, and the AI generates a response. It's incredibly useful, but generative AI stops at the output.

Predictive AI came earlier: it was the first significant wave of applied AI in business. These systems excel at identifying patterns and forecasting outcomes. They can tell you which leads are most likely to convert, which customers are at risk of churn, or which marketing channels will deliver the best ROI. Predictive AI is valuable because it provides insights that inform better decision-making. But it stops there. Once the prediction is made, humans still have to interpret the results, decide what to do, and take action. Predictive AI is an advisor, not an executor.

Agentic AI is different. Instead of just generating an answer and waiting for your next instruction, agentic AI takes action on your behalf, makes decisions based on changing circumstances, and works toward goals with minimal supervision. Think of it less like a consultant who gives you advice, and more like an assistant who can actually execute the plan.

For example, let's say you ask a generative AI tool to help you plan a vacation. It might give you an itinerary with restaurant recommendations, flight options, and hotel suggestions. But you still have to book everything yourself, check availability, compare prices, and handle all the logistics.

An agentic AI could actually book your flights, reserve hotels, make restaurant reservations, and even monitor prices to rebook if better deals appear, all while keeping you informed about what it's doing. It's taking action in the real world, not just providing information.

The key difference is autonomy and action. Generative AI responds to prompts and creates outputs. Agentic AI can perceive its environment, make decisions, use tools, and take actions to achieve specific goals. It can break down complex tasks into steps, adapt when something doesn't work, and operate across multiple systems without constant human intervention.

Agentic AI doesn’t replace generative AI — it builds on it. Generative models remain an essential capability inside agentic systems, providing reasoning, language understanding, and content creation. The difference is that agentic AI doesn’t stop at producing an output. It uses that output as fuel for decisions, actions, and multi-step workflows. Generative AI provides the intelligence; agentic AI turns that intelligence into execution.

What's Required for an AI to Be "Agentic"?

In short, an AI system is considered agentic when it has the following capabilities:

Autonomy: Agents can operate without continuous prompts.

Agency: They take initiative based on goals, not just instructions.

Reasoning & Planning: Agentic AI breaks down high-level goals — like "optimize spend for quality conversations" — into a sequence of executable steps.

Memory & Reflection: Agentic AI remembers context and evaluates its own performance.

Tool Use: Agents can call APIs, interact with databases, or trigger workflows. These capabilities allow agents to "run" processes rather than simply assist with them.
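To make the tool-use and memory requirements concrete, here is a minimal Python sketch (3.9+). It assumes a hypothetical `Agent` class that looks up tools by name and records every call in an in-memory log; the tools `fetch_call_volume` and `pause_campaign` are illustrative placeholders with hard-coded results, not real Invoca or third-party APIs.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Agent:
    """Hypothetical agent with a tool registry and a simple episodic memory."""
    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)
    memory: list[dict[str, Any]] = field(default_factory=list)

    def register_tool(self, name: str, fn: Callable[..., Any]) -> None:
        # Tools are plain callables looked up by name; they stand in for
        # API calls, database queries, or workflow triggers.
        self.tools[name] = fn

    def call_tool(self, name: str, **kwargs: Any) -> Any:
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        result = self.tools[name](**kwargs)
        # Record every call so later steps can reflect on what was done.
        self.memory.append({"tool": name, "args": kwargs, "result": result})
        return result


# Illustrative placeholder tools; real ones would hit external systems.
def fetch_call_volume(campaign: str) -> int:
    return {"search": 120, "social": 15}.get(campaign, 0)


def pause_campaign(campaign: str) -> str:
    return f"paused {campaign}"


agent = Agent()
agent.register_tool("fetch_call_volume", fetch_call_volume)
agent.register_tool("pause_campaign", pause_campaign)

# A tiny two-step plan: observe with one tool, then act with another.
if agent.call_tool("fetch_call_volume", campaign="social") < 20:
    agent.call_tool("pause_campaign", campaign="social")

print([step["tool"] for step in agent.memory])
# ['fetch_call_volume', 'pause_campaign']
```

Keeping tools behind a name-based registry means the planning step only has to choose a tool name and arguments, and the memory log gives the agent (and its reviewers) a record of what it has already done.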

How Agentic Systems Are Structured

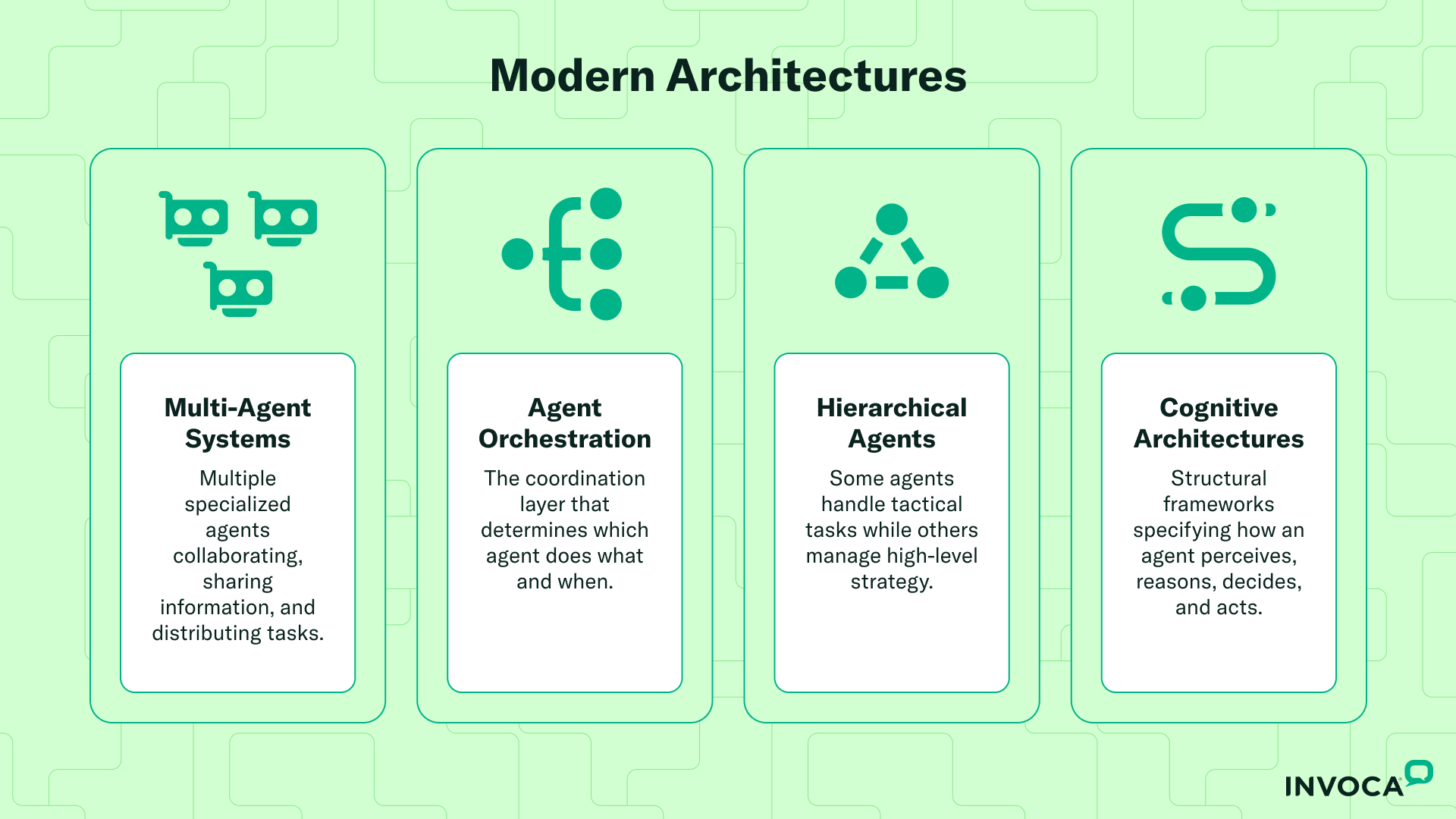

Building effective agentic AI requires orchestrating multiple components that work together. Modern agentic architectures are designed to mirror how high-performing teams operate: with specialized roles, clear coordination, and shared strategic direction.

At the foundation, multi-agent systems represent one of the most powerful architectural approaches. Rather than relying on a single AI to handle every task, these systems deploy multiple specialized agents that collaborate, share information, and distribute work based on their strengths. Think of it like a marketing team, with one person handling analytics, another managing creative, and a third focusing on campaign execution. Each agent has a specific domain of expertise, and together they accomplish more than any individual could alone.

But multiple agents working in parallel isn't enough — they need coordination. That's where agent orchestration comes in. The orchestration layer acts as the project manager, determining which agent should handle which task, when handoffs should occur, and how information should flow between agents. For instance, in a customer journey optimization scenario, the orchestration layer might route inbound call analysis to one agent, sentiment scoring to another, and campaign recommendations to a third, all while ensuring the outputs align and inform each other.
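The orchestration pattern in this scenario can be sketched as a small router. In this hypothetical Python example, each specialized agent is just a function that reads a shared context and returns new facts; the `Orchestrator` class decides which agent runs for which task and hands each agent the outputs of the ones before it. The keyword checks stand in for real call-analysis and sentiment models, and all names are illustrative.

```python
from typing import Callable

AgentFn = Callable[[dict], dict]


def call_analysis_agent(ctx: dict) -> dict:
    # Placeholder analysis: count calls that mention pricing.
    return {"pricing_calls": sum("price" in t.lower() for t in ctx["transcripts"])}


def sentiment_agent(ctx: dict) -> dict:
    # Placeholder keyword-based sentiment over the same transcripts.
    negative = sum("frustrated" in t.lower() for t in ctx["transcripts"])
    return {"negative_share": negative / len(ctx["transcripts"])}


def campaign_agent(ctx: dict) -> dict:
    # Depends on both upstream agents' outputs, so it must run after them.
    if ctx["negative_share"] > 0.5:
        return {"recommendation": "review messaging"}
    if ctx["pricing_calls"] > 0:
        return {"recommendation": "promote pricing page"}
    return {"recommendation": "no change"}


class Orchestrator:
    """Routes each task to its registered agent and hands results downstream."""

    def __init__(self) -> None:
        self.routes: dict[str, AgentFn] = {}

    def register(self, task: str, agent: AgentFn) -> None:
        self.routes[task] = agent

    def run(self, pipeline: list[str], ctx: dict) -> dict:
        for task in pipeline:
            # Handoff: each agent sees the original input plus all prior outputs.
            ctx = {**ctx, **self.routes[task](ctx)}
        return ctx


orch = Orchestrator()
orch.register("call_analysis", call_analysis_agent)
orch.register("sentiment", sentiment_agent)
orch.register("campaign", campaign_agent)

result = orch.run(
    ["call_analysis", "sentiment", "campaign"],
    {"transcripts": ["What's the price?", "Great service", "I'm frustrated"]},
)
print(result["recommendation"])  # promote pricing page
```

Passing a shared context forward is one simple way to let outputs inform each other; a production orchestrator might also run independent agents in parallel and handle failures or retries.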

Some problems also benefit from hierarchical agent structures. In these systems, certain agents operate at a tactical level — executing specific tasks like data extraction or API calls — while higher-level agents manage strategy and decision-making. The tactical agents report to the strategic agents, which, in turn, set objectives and constraints. This hierarchy prevents agents from getting lost in the weeds while ensuring that granular execution still aligns with business goals.
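A hierarchical setup can be sketched in the same spirit. In this illustrative example (all class names are hypothetical), tactical agents each request budget for their own channel, and a strategic agent enforces a daily budget cap by scaling the requests down proportionally when they exceed it. Proportional scaling is just one possible reconciliation rule.

```python
from dataclasses import dataclass


@dataclass
class Constraints:
    # Set by the strategic layer; tactical agents must stay inside them.
    max_daily_budget: float


@dataclass
class Report:
    agent: str
    requested_budget: float


class TacticalAgent:
    """Executes a narrow task and reports back; knows nothing about strategy."""

    def __init__(self, name: str, requested_budget: float) -> None:
        self.name = name
        self.requested_budget = requested_budget

    def execute(self) -> Report:
        return Report(self.name, self.requested_budget)


class StrategicAgent:
    """Sets constraints and reconciles tactical reports against them."""

    def __init__(self, constraints: Constraints) -> None:
        self.constraints = constraints

    def allocate(self, reports: list[Report]) -> dict[str, float]:
        total = sum(r.requested_budget for r in reports)
        # If requests exceed the cap, scale each one down proportionally
        # so the combined plan still fits the strategic constraint.
        scale = min(1.0, self.constraints.max_daily_budget / total) if total else 0.0
        return {r.agent: round(r.requested_budget * scale, 2) for r in reports}


strategist = StrategicAgent(Constraints(max_daily_budget=1000.0))
workers = [TacticalAgent("search", 900.0), TacticalAgent("social", 600.0)]
plan = strategist.allocate([w.execute() for w in workers])
print(plan)  # {'search': 600.0, 'social': 400.0}
```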

Underlying all of this are cognitive architectures — the structural frameworks that define how an agent perceives its environment, reasons about what it observes, makes decisions, and takes action. These architectures specify the "thought process" an agent follows, ensuring it doesn't just react randomly but operates with a consistent methodology.

A well-designed cognitive architecture might include perception modules that gather data, reasoning modules that evaluate options, and action modules that execute decisions, all connected through a feedback loop that allows the agent to learn from outcomes.
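Perception, reasoning, and action modules can be expressed as separate interfaces wired together by a feedback loop. This Python sketch uses `typing.Protocol` to define the three module types; the concrete modules, the toy environment (where conversion rate is simply 1% of the bid), and the step-halving rule used to "learn" from overshooting are all assumptions made for illustration.

```python
from typing import Protocol


class Perception(Protocol):
    def observe(self, env: dict) -> float: ...


class Reasoning(Protocol):
    def decide(self, observation: float) -> float: ...


class Action(Protocol):
    def act(self, env: dict, decision: float) -> None: ...


class ConversionSensor:
    """Perception module: reads the metric the agent cares about."""

    def observe(self, env: dict) -> float:
        return env["conversion_rate"]


class AdaptiveBidPolicy:
    """Reasoning module: moves toward a target and halves its step size
    whenever the error changes sign (a simple way to learn from overshoot)."""

    def __init__(self, target: float, step: float) -> None:
        self.target, self.step, self.last_sign = target, step, 0

    def decide(self, observation: float) -> float:
        sign = 1 if self.target - observation > 0 else -1
        if self.last_sign and sign != self.last_sign:
            self.step /= 2  # feedback: the last move overshot, so be more cautious
        self.last_sign = sign
        return sign * self.step


class BidActuator:
    """Action module: applies the decision; the toy environment responds."""

    def act(self, env: dict, decision: float) -> None:
        env["bid"] += decision
        env["conversion_rate"] = 0.01 * env["bid"]  # toy response model


def run_loop(p: Perception, r: Reasoning, a: Action, env: dict,
             target: float, tol: float = 1e-6, max_steps: int = 20) -> int:
    """Perceive, reason, act; each outcome feeds the next observation."""
    for step in range(max_steps):
        obs = p.observe(env)
        if abs(target - obs) < tol:
            return step
        a.act(env, r.decide(obs))
    return max_steps


env = {"bid": 2.0, "conversion_rate": 0.02}
steps = run_loop(ConversionSensor(), AdaptiveBidPolicy(0.05, 2.0),
                 BidActuator(), env, target=0.05)
print(steps, env["bid"])
```

Because the loop depends only on the three interfaces, any module can be swapped (for example, replacing the rule-based policy with a model-driven planner) without touching the others.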

The beauty of these layered designs is that they bring discipline and clarity to what could otherwise become chaotic. Agents aren't just doing things — they're working within a structured system that defines roles, responsibilities, and workflows. And for businesses looking to deploy AI at scale, that structure is what distinguishes a proof of concept from a production-ready solution.

How Agents Operate

If you've ever watched a skilled professional tackle a complex problem, you'll recognize the pattern that an AI agent follows. It's a cycle of observation, thinking, action, and adjustment that’s repeated until the goal is achieved. What makes this cycle powerful is that it closely mirrors how humans approach work, which is why AI agents can integrate so naturally into business processes.

The cycle starts with perception. Before an agent can do anything useful, it needs to gather data from its environment. This might involve retrieving data from APIs, querying databases, reading documents, or monitoring system outputs. In a marketing context, perception could involve analyzing call transcripts, reviewing campaign performance metrics, or tracking customer behavior across touchpoints. It could also involve understanding customer intent, such as why they called or what prompted a particular interaction. The key is that the agent isn't passively waiting for someone to hand it data—it's actively seeking out the information it needs to make informed decisions.

Once the agent has context, it moves into reasoning. This is where the agent interprets what it's observed, evaluates possible paths forward, and weighs trade-offs. Reasoning might involve comparing different strategies, predicting outcomes, or identifying patterns in the data.

For example, if an agent is optimizing ad spend, the reasoning might include evaluating which channels deliver the highest-quality leads, which campaigns are underperforming, and where to reallocate budget for maximum impact. Sound reasoning takes more than just crunching numbers—it requires understanding context, constraints, and business objectives.
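As a worked example of this kind of reasoning, the sketch below ranks channels by cost per quality lead and moves a fixed fraction of budget from the worst performer to the best. The channel figures, the 20% shift fraction, and the ranking heuristic are made up for illustration; a real agent would weigh more context and constraints.

```python
def cost_per_quality_lead(spend: float, quality_leads: int) -> float:
    # Channels with no qualified leads get an infinite cost so they rank last.
    return spend / quality_leads if quality_leads else float("inf")


def reallocate(channels: dict[str, dict], shift_fraction: float = 0.2) -> dict[str, float]:
    """Move a fraction of budget from the worst channel to the best one,
    ranked by cost per quality lead (lower is better)."""
    ranked = sorted(
        channels,
        key=lambda c: cost_per_quality_lead(channels[c]["spend"], channels[c]["quality_leads"]),
    )
    best, worst = ranked[0], ranked[-1]
    budgets = {c: v["spend"] for c, v in channels.items()}
    moved = budgets[worst] * shift_fraction
    budgets[worst] -= moved
    budgets[best] += moved
    return budgets


channels = {
    "search": {"spend": 5000.0, "quality_leads": 100},  # $50 per quality lead
    "display": {"spend": 3000.0, "quality_leads": 20},  # $150 per quality lead
    "social": {"spend": 2000.0, "quality_leads": 25},   # $80 per quality lead
}
print(reallocate(channels))
# {'search': 5600.0, 'display': 2400.0, 'social': 2000.0}
```

With these numbers, $600 (20% of display's $3,000) moves from display to search, while total spend stays at $10,000.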

With a decision made, the agent moves to action. This is where agentic AI diverges most dramatically from traditional AI tools. Actions might include making function calls, updating records, triggering workflows, sending notifications, or even initiating conversations with other agents. The agent isn't just generating recommendations—it's executing them. If the decision is to shift budget from one campaign to another, the agent doesn't wait for approval; it implements the change (within predefined guardrails, of course).
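"Within predefined guardrails" can be as simple as clamping every action before it executes. In this hypothetical sketch, a `Guardrails` object caps how much of a campaign's budget can move in a single action and sets a floor below which no campaign's budget may fall; the specific limits are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    max_shift_fraction: float = 0.25    # at most 25% of a campaign's budget per action
    min_campaign_budget: float = 500.0  # never starve a campaign below this


def shift_budget(budgets: dict[str, float], source: str, target: str,
                 amount: float, rails: Guardrails) -> float:
    """Move budget from source to target, clamped to the guardrails.

    Returns the amount actually moved (0.0 if the guardrails block the action)."""
    cap = budgets[source] * rails.max_shift_fraction
    headroom = max(0.0, budgets[source] - rails.min_campaign_budget)
    allowed = min(amount, cap, headroom)
    if allowed <= 0:
        return 0.0
    budgets[source] -= allowed
    budgets[target] += allowed
    return allowed


budgets = {"brand": 2000.0, "promo": 1000.0}
moved = shift_budget(budgets, "brand", "promo", 800.0, Guardrails())
print(moved, budgets)  # 500.0 {'brand': 1500.0, 'promo': 1500.0}
```

Here the agent asked to move $800, but the 25% cap limits the change to $500. Returning the amount actually moved lets the caller log the difference between what was requested and what was allowed.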

But autonomy doesn't mean operating in a vacuum. That's why human-in-the-loop oversight is built into the cycle. For decisions that carry significant risk, require judgment, or involve ethical considerations, agents defer to humans. This might mean flagging a decision for review, asking for confirmation before proceeding, or escalating when uncertainty is high. The goal is to balance efficiency with responsibility by letting agents handle routine execution while keeping humans involved where it matters most.
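One way to encode when an agent should defer to a human is a small routing policy. The sketch below is an assumption rather than a prescribed design: low confidence or an ethically sensitive decision is escalated, a confident but high-stakes change asks for confirmation, and everything else executes automatically. The function name and thresholds are placeholders.

```python
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    REQUEST_CONFIRMATION = "request_confirmation"
    ESCALATE = "escalate"


def route_decision(spend_change: float, confidence: float, *,
                   sensitive: bool = False, risk_limit: float = 1000.0,
                   min_confidence: float = 0.8) -> Route:
    """Decide whether an agent may act alone or must defer to a human.

    Thresholds are illustrative; in practice they would come from policy."""
    if sensitive or confidence < min_confidence:
        # Ethical sensitivity or high uncertainty: hand the decision to a person.
        return Route.ESCALATE
    if abs(spend_change) > risk_limit:
        # Confident but high stakes: ask before proceeding.
        return Route.REQUEST_CONFIRMATION
    return Route.AUTO_EXECUTE


print(route_decision(200.0, 0.95))   # Route.AUTO_EXECUTE
print(route_decision(5000.0, 0.95))  # Route.REQUEST_CONFIRMATION
print(route_decision(200.0, 0.40))   # Route.ESCALATE
```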

Finally, agents engage in iteration. They don't execute once and call it done. They monitor outcomes, evaluate performance, and adjust their approach based on what they learn. If an action didn't produce the expected result, the agent revisits its reasoning, gathers more data, and tries a different path. This iterative loop enables agents to adapt to changing conditions and improve over time. It's also what elevates them from "smart autocomplete" to genuine autonomous workers capable of handling complex, dynamic tasks.
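The iterate-and-adjust loop can be sketched as trying candidate strategies, measuring each outcome, and moving on when the goal is not met. The strategy names and their fixed outcomes below are hypothetical stand-ins for real actions whose results the agent would observe; a more capable agent would also revise its candidate list based on what it learned.

```python
from typing import Callable, Optional

Strategy = tuple[str, Callable[[], float]]


def iterate_toward_goal(
    strategies: list[Strategy], goal: float, max_attempts: int = 5
) -> tuple[Optional[str], list[tuple[str, float]]]:
    """Act, measure, and switch strategy until an outcome meets the goal.

    Returns the name of the strategy that succeeded (or None) and the
    history of observed outcomes, which the agent can later reflect on."""
    history: list[tuple[str, float]] = []
    for name, run in strategies[:max_attempts]:
        outcome = run()  # execute the strategy, then observe its result
        history.append((name, outcome))
        if outcome >= goal:
            return name, history
        # Outcome fell short: try a different path on the next attempt.
    return None, history


# Hypothetical strategies with made-up conversion-rate outcomes.
strategies = [
    ("raise_bids", lambda: 0.031),
    ("shift_to_search", lambda: 0.044),
    ("refresh_ad_copy", lambda: 0.052),
]
winner, history = iterate_toward_goal(strategies, goal=0.05)
print(winner)  # refresh_ad_copy
```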

Invoca's Agentic AI Platform

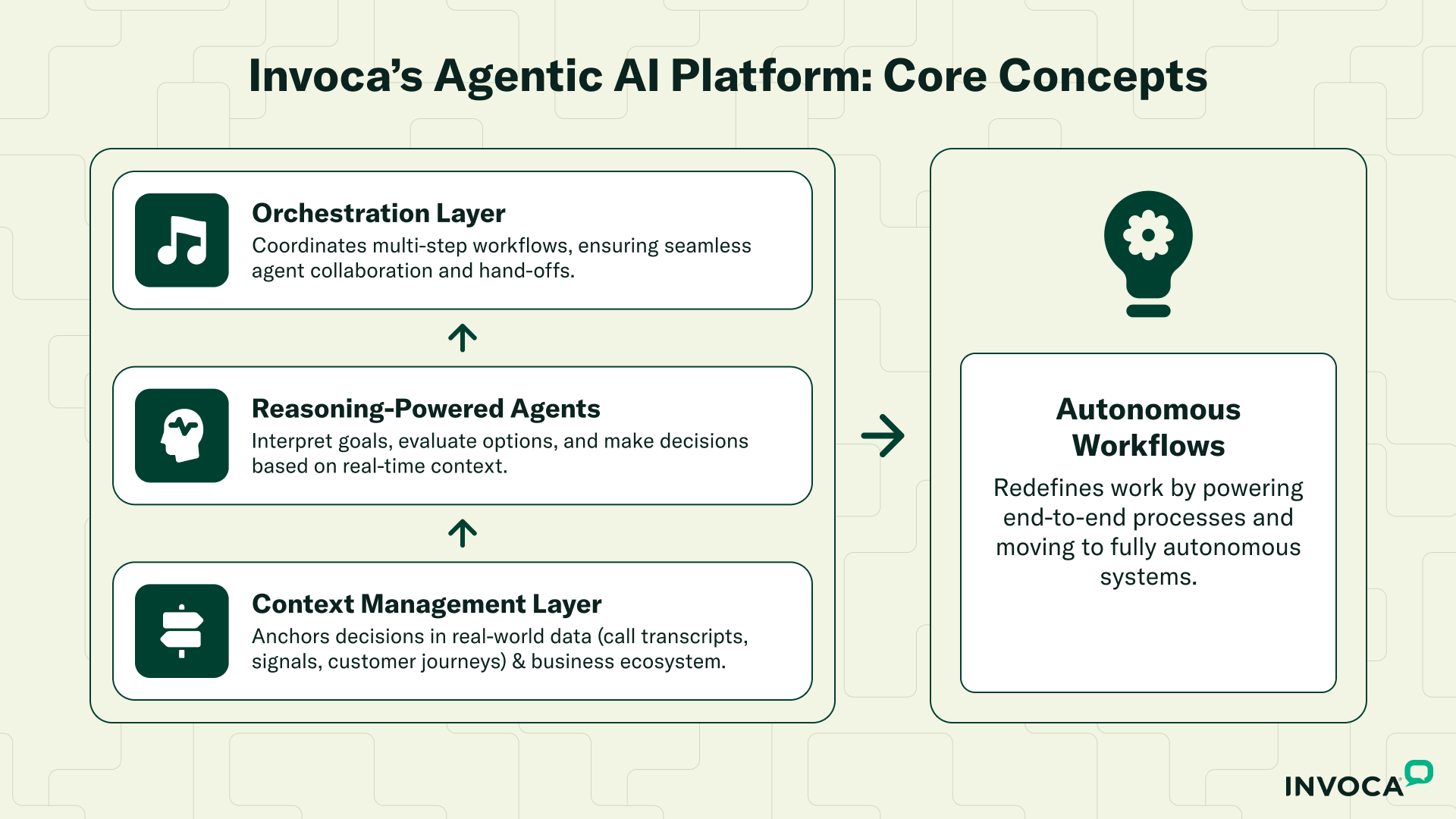

At Invoca, we're not just observing the shift toward agentic AI—we're actively building the infrastructure to make it a reality for our teams and our customers. Our internal agentic AI platform is designed to support the full agent lifecycle, from creation and testing through orchestration and deployment at scale. The goal is to move beyond one-off experiments and establish a production-grade system that can power autonomous workflows across marketing, customer experience, and revenue operations.

At the heart of the platform are reasoning-powered agents that go beyond simple automation. These agents don't just execute predefined scripts — they interpret goals, evaluate options, and make decisions based on real-time context. Whether it's optimizing call routing, identifying high-intent leads, or recommending next-best actions for a customer journey, our agents are built to handle complexity and ambiguity.

What makes these agents truly powerful is their ability to orchestrate multi-step workflows. A single marketing objective might involve dozens of micro-decisions — analyzing call data, scoring lead quality, adjusting campaign budgets, triggering follow-up actions, and more. Our platform coordinates these steps, ensuring that agents work together seamlessly, hand off tasks at the right moments, and maintain alignment with overarching business goals. This orchestration layer enables us to tackle end-to-end processes rather than just isolated tasks.

To ensure our agents operate with real-world intelligence rather than abstract guesses, Invoca is building a context management layer that anchors every decision in the data environments that matter most to our customers.

This layer synthesizes the full breadth of Invoca’s industry understanding — from call transcripts and conversational signals to customer journey patterns and marketing performance data. It gives agents a living model of each business’s ecosystem: the taxonomy of its customers, the structure of its campaigns, the nuances of its products, and the intent signals that drive revenue.

Because agents reason within this rich context rather than relying on generic internet knowledge, their decisions reflect how customers actually behave in a given vertical, how marketers evaluate performance, and how conversations unfold in high-stakes buying moments.

This grounding transforms agents from general-purpose systems into domain-tuned operators capable of making precise, high-impact decisions. It’s what allows them to execute workflows with the same level of understanding a seasoned operator would bring, consistently and at scale.

The ultimate vision is to enable teams to move from human-in-the-loop systems to fully autonomous workflows that operate around business outcomes, whether that's increasing call conversion rates, improving lead quality, or enhancing customer experience. As we evolve this platform, we're not just automating tasks; we're redefining how work gets done at Invoca.

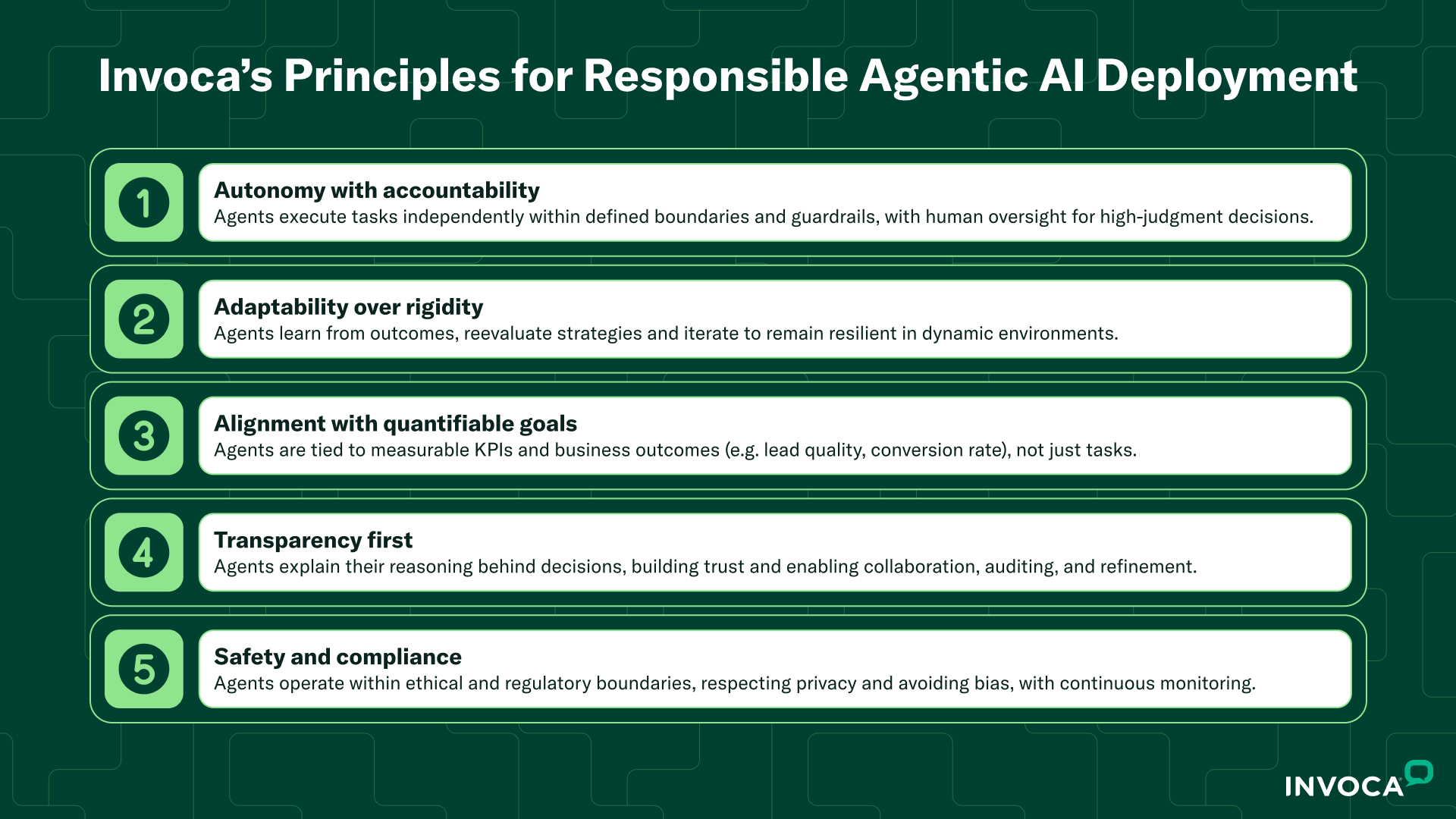

Principles for Responsible Agentic Deployment

Deploying agentic AI at scale isn't just a technical challenge; it's an organizational and ethical one. Agents that operate with autonomy carry real power, and with that power comes the responsibility to deploy them thoughtfully. At Invoca, we're guided by core principles that ensure our agents deliver value while maintaining trust, transparency, and alignment with business goals.

1. Autonomy with accountability

Agents should be empowered to execute tasks independently — that's the whole point. But autonomy doesn't mean a lack of oversight. We design our agents to operate within clearly defined boundaries, with guardrails that prevent them from taking actions that carry unacceptable risk. The goal is to let agents handle the heavy lifting so humans can focus on strategy, creativity, and high-judgment decisions. But humans remain accountable for outcomes, so we need visibility into what agents are doing and why.

2. Adaptability over rigidity

Agentic AI must be designed to learn from outcomes and adjust its approach. If a strategy isn't working, the agent shouldn’t keep executing blindly; it must reevaluate and iterate. This adaptability is what makes agents resilient in dynamic business environments where customer behavior, market conditions, and competitive dynamics are constantly shifting.

3. Alignment with quantifiable goals

Goal alignment is equally critical. Agents should be tied to measurable KPIs and business outcomes, not abstract tasks. If an agent is optimizing ad spend, its success should be measured by lead quality and conversion rates, not by the number of adjustments it makes. This outcome-focused approach keeps agents working toward what actually matters rather than optimizing for vanity metrics or getting lost in tactical execution. It also makes it easier to evaluate each agent's performance and understand its impact on the business.
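To illustrate scoring an agent on outcomes rather than activity, this sketch compares two periods using lead quality (qualified calls divided by calls) and conversion rate (conversions divided by calls), while recording the number of adjustments without scoring it. The metric definitions, the `Period` structure, and the figures are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Period:
    calls: int
    qualified_calls: int
    conversions: int
    adjustments: int  # how busy the agent was; deliberately NOT scored


def kpis(p: Period) -> dict[str, float]:
    return {
        "lead_quality": p.qualified_calls / p.calls,
        "conversion_rate": p.conversions / p.calls,
    }


def outcome_lift(before: Period, after: Period) -> dict[str, float]:
    """Relative change in each outcome KPI; activity volume is ignored."""
    b, a = kpis(before), kpis(after)
    return {k: round((a[k] - b[k]) / b[k], 3) for k in b}


before = Period(calls=1000, qualified_calls=400, conversions=80, adjustments=0)
after = Period(calls=1000, qualified_calls=460, conversions=96, adjustments=37)
print(outcome_lift(before, after))  # {'lead_quality': 0.15, 'conversion_rate': 0.2}
```

Lead quality rises from 40% to 46% (a 15% relative lift) and conversion rate from 8% to 9.6% (a 20% lift). The 37 adjustments the agent made do not affect the score either way.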

4. Transparency first

Transparency is non-negotiable. When an agent makes a decision, stakeholders need to understand the reasoning behind it. This means agents should be able to explain their actions: not just what they did, but why. Transparency builds trust, enables better collaboration between humans and agents, and makes it possible to audit and refine agent behavior over time. If an agent reallocates budget from one campaign to another, the marketing team should be able to see the rationale for the decision and verify that it aligns with their strategic priorities.
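Transparency is easier to deliver if every action is logged together with its rationale and evidence. The `DecisionRecord` structure below is a hypothetical sketch of such an audit entry: it produces a human-readable explanation and a JSON copy for later review. The field names and values are illustrative.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Hypothetical audit entry: what the agent did, why, and on what evidence."""
    agent: str
    action: str
    rationale: str
    evidence: dict[str, float]
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def explain(self) -> str:
        facts = ", ".join(f"{k}={v}" for k, v in self.evidence.items())
        return f"[{self.agent}] {self.action} because {self.rationale} ({facts})"


audit_log: list[DecisionRecord] = []

record = DecisionRecord(
    agent="budget-optimizer",
    action="moved $500 from 'brand' to 'promo'",
    rationale="promo has a lower cost per qualified call",
    evidence={"brand_cost_per_qualified_call": 62.5, "promo_cost_per_qualified_call": 41.0},
)
audit_log.append(record)
print(record.explain())
print(json.dumps(asdict(record), indent=2))  # machine-readable copy for auditing
```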

5. Safety and compliance

Finally, safety and compliance must be baked into every layer of agentic deployment. Agents need to operate within ethical and regulatory boundaries, respect privacy, avoid bias, and comply with legal requirements. This means implementing controls that prevent agents from taking actions that could harm customers, violate regulations, or damage the brand. It also means continuously monitoring agent behavior to ensure it stays aligned with company values and societal norms.

Together, these principles create a framework for deploying agentic AI responsibly. They ensure that agents not only work, but also work in ways that are trustworthy, explainable, and aligned with the business's long-term success.

As we continue to evolve our platform, these principles will guide every decision we make about how agents are designed, deployed, and managed.

The Next Chapter in AI

Agentic AI represents a structural shift in how organizations operate. As Invoca evolves its internal platform, teams will increasingly rely on autonomous systems to handle the heavy lifting, freeing humans to do the creative and strategic work that drives growth.

This is the next chapter — beyond predictive, beyond generative, into truly autonomous intelligence.